New robots.txt Tester Launches in Google Webmaster Tools

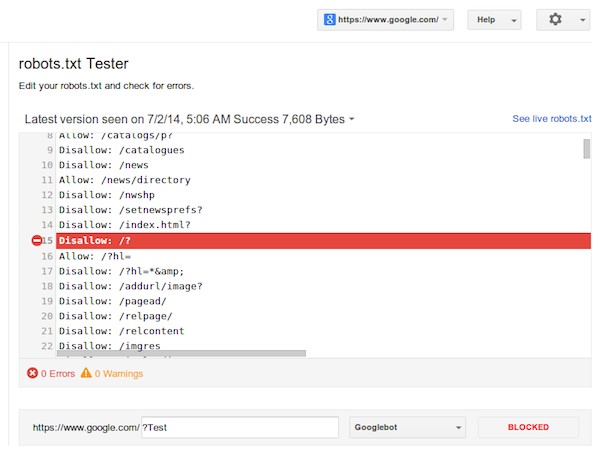

The robots.txt testing tool in Google Webmaster Tools has just received an update that highlights errors preventing Google from crawling pages on your website, lets you edit your file, lets you test whether URLs are blocked, and lets you view older versions of your file.

If Google is not crawling a page or a section of your website, the robots.txt tester, located under the Crawl section of Google Webmaster Tools, now lets you test whether an issue in your file is blocking Google. (This section of GWT used to be known as Blocked URLs.)
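The same kind of "is this URL blocked?" check can be approximated locally. The following is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content, the `example.com` URLs, and the `is_blocked` helper are all hypothetical and only for illustration. Python's parser applies the first matching rule in file order, which may not match Google's own rule precedence, so treat the result as a rough check rather than a substitute for the tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_blocked(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/private/report.html",
        "https://example.com/index.html",
    ):
        verdict = "blocked" if is_blocked(ROBOTS_TXT, "Googlebot", url) else "allowed"
        print(url, verdict)
```

Parsing from a string rather than fetching the live file makes it possible to try out an edited robots.txt before uploading it to the server.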

“To guide your way through complicated directives, it will highlight the specific one that led to the final decision,” wrote Asaph Arnon of the Webmaster Tools team on the Google Webmaster Central blog. “You can make changes in the file and test those too; you’ll just need to upload the new version of the file to your server afterwards to make the changes take effect.

“Additionally, you’ll be able to review older versions of your robots.txt file, and see when access issues block us from crawling,” he continued. “For example, if Googlebot sees a 500 server error for the robots.txt file, we’ll generally pause further crawling of the website.”
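Since a server error on the robots.txt file itself can pause crawling, it may be worth checking what status code your server returns for it. The sketch below uses only the Python standard library; the function names and the `example.com` address are assumptions for illustration. It only flags 5xx responses, the case described above, and does not try to model how Google treats other status codes.

```python
import urllib.error
import urllib.request


def robots_txt_status(site: str, timeout: float = 10.0) -> int:
    """Fetch <site>/robots.txt and return the HTTP status code."""
    url = site.rstrip("/") + "/robots.txt"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; the code is still useful.
        return err.code


def is_server_error(status: int) -> bool:
    """Return True for 5xx responses, such as the 500 error mentioned above."""
    return 500 <= status <= 599


if __name__ == "__main__":
    # Placeholder site; replace with your own domain.
    status = robots_txt_status("https://example.com")
    if is_server_error(status):
        print(f"robots.txt returned {status}: crawling may be paused")
    else:
        print(f"robots.txt returned {status}")
```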

In announcing the news on Google+, Google’s John Mueller wrote that even if you think your robots.txt is fine, you should check for errors or warnings.

“Some of these issues can be subtle and easy to miss,” he wrote. “While you’re at it, also double-check how the important pages of your site render with Googlebot, and if you’re accidentally blocking any JS or CSS files from crawling.”
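One rough way to spot accidentally blocked JS or CSS files is to run a list of asset URLs through a robots.txt parser. This is a sketch using Python's standard `urllib.robotparser`; the robots.txt rules, the asset URLs, and the `blocked_assets` helper are hypothetical, and Python's rule matching may differ from Googlebot's in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks a script directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
"""

# Hypothetical asset URLs that a page needs in order to render.
ASSET_URLS = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]


def blocked_assets(robots_txt, asset_urls, user_agent="Googlebot"):
    """Return the subset of asset_urls that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_assets(ROBOTS_TXT, ASSET_URLS):
        print("blocked:", url)
```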